PEPC

The PEPC project (Pretty Efficient Parallel Coulomb Solver) is a public tree code that has been developed at Jülich Supercomputing Centre since the early 2000s. Our code is a non-recursive version of the Barnes-Hut algorithm, using a level-by-level approach to both tree construction and traversals.


Description

Algorithm

The oct-tree method was originally introduced by Josh Barnes and Piet Hut in the mid-1980s to speed up astrophysical N-body simulations with long-range interactions; see Nature 324, 446 (1986). Their idea was to use successively larger multipole groupings of distant particles to reduce the computational effort of the force calculation from the O(N²) operations needed for brute-force summation to a more manageable O(N log N). Though mathematically less elegant than the Fast Multipole Method, the Barnes-Hut algorithm is well suited to dynamic, nonlinear problems, can be quickly adapted to numerous interaction kernels, and can be combined with multiple-timestep integrators.
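The grouping idea can be illustrated with a minimal Python sketch. It is not PEPC's implementation: it keeps only the monopole term (total mass at the centre of mass), uses positive masses as in the original gravitational setting, builds the tree recursively for brevity, and walks it with an explicit stack. The names `build`, `potential`, `direct` and the opening parameter `theta` are assumptions made for this example.

```python
import math
import random


def build(particles, center, half):
    """Build an oct-tree node for the (position, mass) pairs inside a cube.

    Assumes all positions are distinct; otherwise the recursion would not end.
    """
    if len(particles) == 1:
        pos, mass = particles[0]
        return {"mass": mass, "com": pos, "size": 2.0 * half, "children": []}
    mass = sum(m for _, m in particles)
    com = tuple(sum(p[i] * m for p, m in particles) / mass for i in range(3))
    octants = {}
    for p, m in particles:
        # Octant index: bit i is set when the particle lies on the upper side in axis i.
        idx = sum((p[i] >= center[i]) << i for i in range(3))
        octants.setdefault(idx, []).append((p, m))
    children = []
    for idx, members in octants.items():
        sub_center = tuple(
            center[i] + (half / 2 if (idx >> i) & 1 else -half / 2) for i in range(3)
        )
        children.append(build(members, sub_center, half / 2))
    return {"mass": mass, "com": com, "size": 2.0 * half, "children": children}


def potential(root, x, theta=0.5):
    """Sum m/r at point x, replacing distant cells by their monopole.

    Returns the sum and the number of terms that were actually evaluated.
    """
    phi, n_terms, stack = 0.0, 0, [root]
    while stack:
        node = stack.pop()
        d = math.dist(x, node["com"])
        if node["children"] and node["size"] > theta * d:
            stack.extend(node["children"])  # cell too close: open it
        elif d > 0.0:  # d == 0 is the target particle itself
            phi += node["mass"] / d
            n_terms += 1
    return phi, n_terms


def direct(particles, x):
    """Brute-force reference: one term per source particle."""
    return sum(m / math.dist(x, p) for p, m in particles if p != x)


random.seed(1)
particles = [(tuple(random.random() for _ in range(3)), 1.0) for _ in range(500)]
root = build(particles, (0.5, 0.5, 0.5), 0.5)
target = particles[0][0]
exact = direct(particles, target)
approx, n_terms = potential(root, target, theta=0.5)
print(f"direct sum: {exact:.4f} using {len(particles) - 1} terms")
print(f"tree sum:   {approx:.4f} using {n_terms} terms")
```

Setting `theta=0.0` opens every cell and reproduces the direct sum; larger values accept coarser groupings, trading accuracy for fewer evaluated terms.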

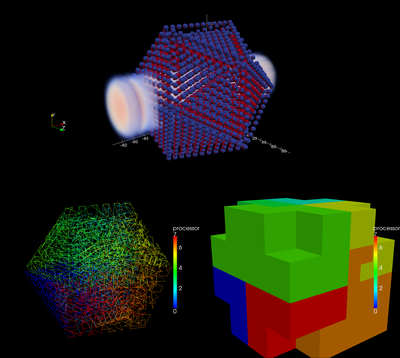

Simulation of the interaction of laser radiation with a neutral nanocluster of 3871 ions and electrons. Bottom left: space-filling Hilbert curve used in the code for domain decomposition. Bottom right: oct-tree domain decomposition represented by the so-called branch nodes.

The parallel version of PEPC is a hybrid MPI/pthreads implementation of the Warren-Salmon 'Hashed Oct-Tree' scheme. It includes several refinements of the tree traversal routine, which is the most challenging component in terms of scalability.
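A hashed oct-tree in the Warren-Salmon style identifies every tree node by an integer key: a leading placeholder bit followed by three bits per level, so that the parent of a node is obtained by dropping the last three bits. The Python sketch below illustrates this with plain bit interleaving (Morton or Z-order), which is a simpler stand-in for the Hilbert curve mentioned in the figure caption above. It then fills a hash table one level at a time, from the finest level up to the root. The function names and the `levels` parameter are assumptions for this example and do not reflect PEPC's Fortran interfaces.

```python
def particle_key(pos, box_min, box_size, levels=10):
    """Return the key of the finest-level cell containing pos.

    The key is a placeholder 1-bit followed by 3 interleaved bits per level.
    """
    cells = 1 << levels
    # Integer cell coordinates, clamped so the upper box face stays inside.
    ijk = [
        min(cells - 1, int((pos[d] - box_min[d]) / box_size * cells))
        for d in range(3)
    ]
    key = 1  # placeholder bit: its position encodes the tree level
    for bit in reversed(range(levels)):
        for d in (2, 1, 0):
            key = (key << 1) | ((ijk[d] >> bit) & 1)
    return key


def level(key):
    """Tree level of a key; the root key 1 is at level 0."""
    return (key.bit_length() - 1) // 3


def parent(key):
    """Key of the parent cell: drop the last three bits."""
    return key >> 3


def build_levelwise(keys):
    """Map every occupied cell key to its particle count, one level at a time."""
    table = {}
    current = {}
    for key in keys:
        current[key] = current.get(key, 0) + 1
    while current:
        table.update(current)
        if 1 in current:  # reached the root key
            break
        parents = {}
        for key, count in current.items():
            parents[parent(key)] = parents.get(parent(key), 0) + count
        current = parents
    return table


points = [(0.1, 0.2, 0.3), (0.8, 0.7, 0.6), (0.15, 0.22, 0.31)]
keys = [particle_key(p, (0.0, 0.0, 0.0), 1.0, levels=4) for p in points]
table = build_levelwise(keys)
for key in sorted(table):
    print(f"level {level(key)}  key {key:b}  particles {table[key]}")
```

Because a key encodes both position and level, looking up a parent, child or neighbour cell becomes a hash-table access on an integer rather than a pointer chase, which is what makes the scheme attractive for distributed trees.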

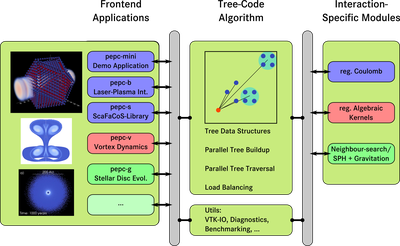

The code is structurally divided into three parts:

- kernel routines that handle all tree-code-specific data structures and communication as well as the actual tree traversal.

- interaction-specific modules, i.e. routines that apply to specific interaction kernels and multipole expansions. Currently, the following interaction kernels are available:

  - Coulomb interaction/gravitation,

  - algebraic kernels for vortex methods,

  - Darwin for magnetoinductive plasmas (no EM wave propagation),

  - nearest-neighbour interactions for smoothed particle hydrodynamics (SPH).

- 'front-end' applications, for example:

  - PEPC-mini, a skeleton molecular dynamics program with different diagnostics including VTK output for convenient visualization,

  - PEPC-b, a code for laser- or particle-beam-plasma interactions as well as plasma-wall interactions,

  - PEPC-s, a library version for the ScaFaCoS project,

  - PEPC-v, an application for simulating vortex dynamics using the vortex particle method,

  - PEPC-dvh, vortex dynamics using the diffused vortex hydrodynamics method,

  - PEPC-g, gravitational interaction with an optional smoothed particle hydrodynamics (SPH) frontend for simulating stellar discs consisting of gas and dust, developed together with the Max Planck Institute for Radio Astronomy (MPIfR) Bonn,

  - several internal experimental frontends.

Thanks to this structure, further interaction kernels, additional applications, and experimental variations of the tree code algorithm can be implemented conveniently.
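The separation into a generic kernel layer, interaction-specific modules and front-ends can be pictured with a small Python sketch. This is only an analogy under stated assumptions: `evaluate` stands in for the generic summation machinery (a plain double loop here, not a tree traversal), `coulomb` and `softened_coulomb` stand in for interaction-specific modules, and `frontend` for an application. None of these names correspond to PEPC's actual Fortran interfaces.

```python
import math


def coulomb(r, q):
    """Interaction module: bare 1/r potential of a source of strength q."""
    return q / math.sqrt(sum(c * c for c in r))


def softened_coulomb(eps):
    """Interaction module factory: 1/sqrt(r^2 + eps^2) potential."""
    def kernel(r, q):
        return q / math.sqrt(sum(c * c for c in r) + eps * eps)
    return kernel


def evaluate(kernel, positions, strengths):
    """Generic layer: knows nothing about the physics inside `kernel`."""
    result = []
    for i, xi in enumerate(positions):
        total = 0.0
        for j, xj in enumerate(positions):
            if i != j:
                r = tuple(a - b for a, b in zip(xi, xj))
                total += kernel(r, strengths[j])
        result.append(total)
    return result


def frontend(kernel):
    """Front-end: sets up a tiny two-particle problem and picks a kernel."""
    positions = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
    strengths = [1.0, 2.0]
    return evaluate(kernel, positions, strengths)


print(frontend(coulomb))
print(frontend(softened_coulomb(eps=0.1)))
```

Swapping the interaction only changes the callable handed to the generic layer, which mirrors the idea that new kernels or applications do not require changes to the shared tree code machinery.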

Structure of the tree code framework. Currently, PEPC supports three interaction-specific modules and several respective frontends for different applications ranging from plasma physics through vortex-particle methods to smoothed particle hydrodynamics. Thanks to well-defined interfaces, symbolized by the grey blocks, implementing further interaction models or additional frontends is straightforward.

Implementation details and scaling

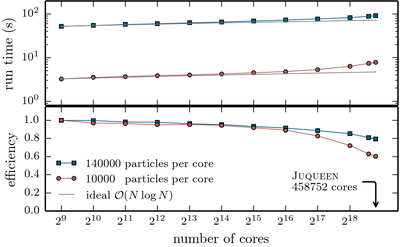

PEPC itself is written in Fortran 2003 with some C wrappers for POSIX functions. In addition to the main pthreads-parallelised tree traversal, a version based on OpenMP is available, and there has also been a version using SMPSs. Besides the hybrid parallelisation, a number of improvements to the original Warren-Salmon 'hashed oct-tree' scheme have been included. They allow excellent scaling of the code on different architectures with up to 2,048,000,000 particles across up to 294,912 processors (on JUGENE, then the largest machine at JSC).

In addition, it has been adapted to more than 32 parallel threads per MPI rank to take full advantage of the capabilities of the BlueGene/Q installation JUQUEEN that was available at JSC. As a result, it showed great parallel scalability across the full machine and qualified for the High-Q Club.

Weak scaling and parallel efficiency of the parallel Barnes-Hut tree code PEPC across the full Blue Gene/Q installation JUQUEEN at JSC.

- LGPL-3.0+

- Open Access


Reference papers

- 1. Author(s): Danilo Durante, Salvatore Marrone, Dirk Brömmel, Andrea Colagrossi, Robert Speck, Paul Gibbon. Published by Zenodo in 2024. DOI: 10.5281/zenodo.10640946

- 2. Author(s): Paul Gibbon, Lukas Arnold, Andreas Breslau, Dirk Brömmel, Junxian Chew, Andrea Colagrossi, Danilo Durante, Marvin-Lucas Henkel, Michael Hofmann, Helge Hübner, Marc Keldenich, Salvatore Marrone, Christian Salmagne, Lorenzo Siddi, Robert Speck, Benedikt Steinbusch, Mathias Winkel. Published by Zenodo in 2024. DOI: 10.5281/zenodo.11035167

- 3. Author(s): Paul Gibbon, Dirk Brömmel, Junxian Chew, Benedikt Steinbusch, Mathias Winkel, Lorenzo Siddi, Robert Speck, Lukas Arnold, Christian Salmagne, Marvin-Lucas Henkel, Michael Hofmann, Helge Hübner, Andreas Breslau, Marc Keldenich. Published by Zenodo in 2023. DOI: 10.5281/zenodo.7965549

- 4. Author(s): Junxian Chew, Dirk Brömmel, Paul Gibbon. Published by Zenodo in 2023. DOI: 10.5281/zenodo.7966171
