FairMQ

FairMQ is designed to help implement large-scale data processing workflows needed in next-generation particle physics experiments.

Description

FairMQ in a nutshell

Next-generation particle physics experiments at GSI/FAIR and CERN face unprecedented data processing challenges. Expected data rates require substantial high-performance computing (HPC) resources, on the order of thousands of CPU/GPU cores per experiment. Online (synchronous) data processing (compression) is crucial for staying within storage capacity limits. The tasks performed during online data processing are also significantly more complex than before: calibration and track finding, which classically ran in an offline (asynchronous) environment, now have to run online in a high-performance, high-throughput environment.

The FairMQ C++ library is designed to aid the implementation of such large-scale online data processing workflows by

- providing an asynchronous message passing abstraction that integrates different existing data transport technologies (no need to re-invent the wheel),

- providing a reasonably efficient data transport service (zero-copy, high throughput) with TCP, SHMEM, and RDMA implementations (RDMA removed in v1.5+),

- being data format agnostic (suitable data formats are usually experiment-specific), and

- providing further basic building blocks such as a simple state machine-based execution framework and a plugin mechanism to integrate with external config/control systems.
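The idea of a message passing abstraction over interchangeable, format-agnostic transports can be sketched in a few lines of plain C++. This is only an illustration under stated assumptions: `Message`, `Transport`, `InProcessTransport`, `MakeMessage`, and `RoundTripKeepsBuffer` are hypothetical names invented here and are not the FairMQ API. The toy in-process backend stands in for a real transport such as TCP or shared memory.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical names throughout; this is NOT the FairMQ API.

// A message is an opaque byte buffer. The transport never inspects the
// payload, which is what keeps this layer data format agnostic.
struct Message {
    std::vector<std::uint8_t> payload;
};

using MessagePtr = std::unique_ptr<Message>;

// Abstract interface that each concrete transport backend would implement.
class Transport {
public:
    virtual ~Transport() = default;
    virtual void Send(MessagePtr msg) = 0;
    virtual MessagePtr Receive() = 0;  // returns nullptr if nothing is queued
};

// Toy in-process backend. Ownership of the buffer is handed over by moving
// the unique_ptr, so the payload bytes themselves are never copied.
class InProcessTransport : public Transport {
public:
    void Send(MessagePtr msg) override { queue_.push_back(std::move(msg)); }

    MessagePtr Receive() override {
        if (queue_.empty()) {
            return nullptr;
        }
        MessagePtr msg = std::move(queue_.front());
        queue_.pop_front();
        return msg;
    }

private:
    std::deque<MessagePtr> queue_;
};

// Packs arbitrary text into a message; any other serialization would do.
MessagePtr MakeMessage(const std::string& text) {
    auto msg = std::make_unique<Message>();
    msg->payload.assign(text.begin(), text.end());
    return msg;
}

// Sends one message through the given transport and reports whether the
// receiver ends up holding the very same buffer the sender filled.
bool RoundTripKeepsBuffer(Transport& transport) {
    MessagePtr out = MakeMessage("hit data");
    const std::uint8_t* sent = out->payload.data();
    transport.Send(std::move(out));
    MessagePtr in = transport.Receive();
    return in != nullptr && in->payload.data() == sent;
}
```

Because user code only depends on the abstract `Transport` interface, a different backend could be swapped in without touching the processing logic, which is the design point the list above makes.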

FairMQ is not an end-user application, but a library and framework used by software experts to implement higher-level experiment-specific applications.
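As a rough illustration of how an experiment-specific application might sit on top of a state machine-based execution framework, the following C++ sketch lets a base class own the state transitions while a derived class only overrides hooks. The states, the transition table, and the names `Device`, `ChangeState`, `Init`, `Run`, and `CountingDevice` are simplified assumptions made for this example; they do not reproduce FairMQ's actual states or interfaces.

```cpp
#include <cassert>
#include <set>
#include <utility>

// Hypothetical, simplified state set; not FairMQ's actual states.
enum class State { Idle, Ready, Running, Exiting };

// Base class owning the state machine. An external control system would
// drive it through ChangeState(); user code only overrides the hooks.
class Device {
public:
    virtual ~Device() = default;

    State GetState() const { return state_; }

    // Attempts a transition. Returns false and leaves the state unchanged
    // if the transition is not in the allowed table.
    bool ChangeState(State next) {
        static const std::set<std::pair<State, State>> allowed = {
            std::make_pair(State::Idle, State::Ready),
            std::make_pair(State::Ready, State::Running),
            std::make_pair(State::Running, State::Ready),
            std::make_pair(State::Ready, State::Idle),
            std::make_pair(State::Idle, State::Exiting),
        };
        if (allowed.count(std::make_pair(state_, next)) == 0) {
            return false;
        }
        const State previous = state_;
        state_ = next;
        if (previous == State::Idle && next == State::Ready) {
            Init();  // one-time setup when leaving Idle
        }
        if (next == State::Running) {
            Run();  // called once per entry into Running in this sketch
        }
        return true;
    }

protected:
    virtual void Init() {}
    virtual void Run() {}

private:
    State state_ = State::Idle;
};

// Example user device that merely counts how often each hook is invoked.
class CountingDevice : public Device {
public:
    int inits = 0;
    int runs = 0;

protected:
    void Init() override { ++inits; }
    void Run() override { ++runs; }
};
```

A controller would call, for example, `device.ChangeState(State::Ready)` followed by `device.ChangeState(State::Running)`; requests that skip a state are rejected, which keeps every device in a workflow in a well-defined condition.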

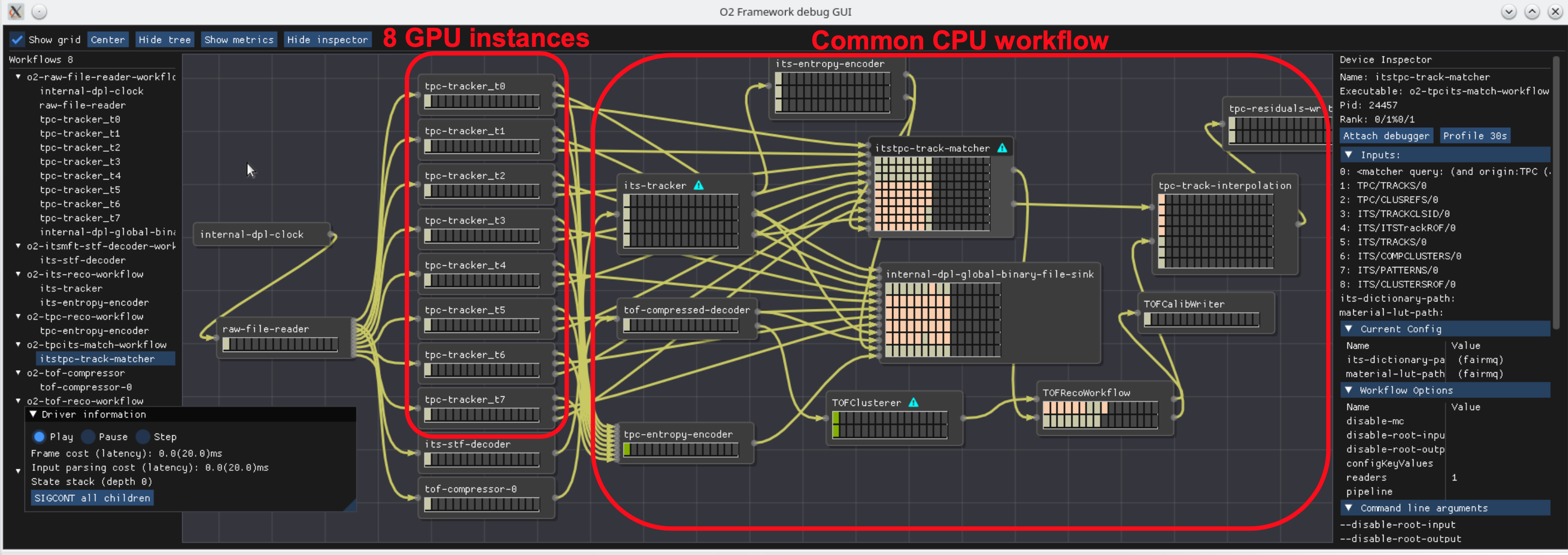

Screenshot of AliceO2 Debug GUI showing the data processing workflow of a single event processing node.

The screenshot shows a visualization of the data processing workflow on a single Alice event processing node (the "O2 Framework debug GUI" tool in the screenshot is part of the AliceO2 project). Data flows logically along the yellow edges (in this case via the FairMQ shmem data transport) through the various processing stages, some of which are implemented as GPU algorithms and others as CPU algorithms.

Although initially designed with online data processing in mind, FairMQ has also been used successfully to parallelize offline simulation and analysis workloads.

- LGPL-3.0-only

Mentions

- 1. Author(s): Mohammad Al-Turany, Alexey Rybalchenko, Dennis Klein, Matthias Kretz, Dmytro Kresan, Radoslaw Karabowicz, Andrey Lebedev, Anar Manafov, Thorsten Kollegger, Florian Uhlig. Published in EPJ Web of Conferences by EDP Sciences in 2020, page: 05021. DOI: 10.1051/epjconf/202024505021

- 2. Author(s): Dennis Klein, Alexey Rybalchenko, Mohammad Al-Turany, Thorsten Kollegger. Published in EPJ Web of Conferences by EDP Sciences in 2019, page: 05022. DOI: 10.1051/epjconf/201921405022

- 3. Author(s): Giulio Eulisse, Piotr Konopka, Mikolaj Krzewicki, Matthias Richter, David Rohr, Sandro Wenzel. Published in EPJ Web of Conferences by EDP Sciences in 2019, page: 05010. DOI: 10.1051/epjconf/201921405010

- 4. Author(s): Dario Berzano, Roel Deckers, Costin Grigoraș, Michele Floris, Peter Hristov, Mikolaj Krzewicki, Markus Zimmermann. Published in EPJ Web of Conferences by EDP Sciences in 2019, page: 05045. DOI: 10.1051/epjconf/201921405045

- 5. Author(s): Sandro Wenzel. Published in EPJ Web of Conferences by EDP Sciences in 2019, page: 02029. DOI: 10.1051/epjconf/201921402029

- 6. Author(s): Alexey Rybalchenko, Dennis Klein, Mohammad Al-Turany, Thorsten Kollegger. Published in EPJ Web of Conferences by EDP Sciences in 2019, page: 05029. DOI: 10.1051/epjconf/201921405029

- 7. Author(s): M. Al-Turany, P. Buncic, P. Hristov, T. Kollegger, C. Kouzinopoulos, A. Lebedev, V. Lindenstruth, A. Manafov, M. Richter, A. Rybalchenko, P. Vande Vyvre, N. Winckler. Published in Journal of Physics: Conference Series by IOP Publishing in 2015, page: 072001. DOI: 10.1088/1742-6596/664/7/072001

- 8. Author(s): M Al-Turany, D Klein, A Manafov, A Rybalchenko, F Uhlig. Published in Journal of Physics: Conference Series by IOP Publishing in 2014, page: 022001. DOI: 10.1088/1742-6596/513/2/022001